Rules rule the world

We provide a historical perspective on AI development and the role of rules in mission-critical systems.

If engineering difficulty has a pinnacle today, it must be in the data science domains that combine ML, optimal control and planning. Autonomous cars and humanoids from Boston Dynamics fit the bill.

Initially there were rules.

- In the 1980s, knowledge-based systems hard-coded knowledge about the world in formal languages.

- IF this happens, THEN do that.

- They failed to get significant traction as the number of rules that are needed to model the real world exploded.

- However, they are still in use today in vertical modeling domains such as fault management. For example, rule-based engines are used today in many complex systems that manage mission-critical infrastructure, e.g. ONAP.
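The IF/THEN structure described above can be sketched in a few lines of Python. This is a minimal illustration, not how ONAP or any production rule engine is implemented; the `Rule` class, the `evaluate` function, the fact names and the thresholds are all hypothetical and chosen only for the example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Facts = Dict[str, float]


@dataclass
class Rule:
    name: str
    condition: Callable[[Facts], bool]  # the IF part
    action: str                         # the THEN part


def evaluate(rules: List[Rule], facts: Facts) -> List[str]:
    """Return the action of every rule whose condition holds for the given facts."""
    return [rule.action for rule in rules if rule.condition(facts)]


# Hypothetical fault-management rules; names and thresholds are illustrative only.
RULES = [
    Rule("link_down", lambda f: f.get("link_up", 1) == 0, "raise_alarm"),
    Rule("high_cpu", lambda f: f.get("cpu_load", 0.0) > 0.9, "scale_out"),
    Rule("high_loss", lambda f: f.get("packet_loss", 0.0) > 0.05, "reroute_traffic"),
]

if __name__ == "__main__":
    observed = {"link_up": 1, "cpu_load": 0.95, "packet_loss": 0.01}
    print(evaluate(RULES, observed))
```

Every new situation the system must handle needs another hand-written entry in `RULES`, which is one way to see why the number of rules grows so quickly when modeling the real world.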

The introduction of advanced AI methods a few years ago created a situation we can explain with an analogy: just as people hedged the risk associated with the introduction of steam engines in the 19th century by maintaining the masts, today we hedge the risk associated with AI by not relying on it for mission-critical decisions.

A nautical analogy on where we are today on AI for mission critical systems. Can you notice anything strange with this ship (Cumberland Basin, photo taken April 1844)?

To put order into the many approaches and methods for delivering AI in our lives, DARPA classified AI development in terms of “waves”.

Wave I: Decoupled systems put together with rules

Wave II: End-to-end differentiable decision making systems

Wave III: AGI - obviously we are not there yet, and no one knows for certain when we will see humanoids able to build other humanoids.

In the 1980s rule-based engines started to be applied in what people called expert systems. In this example you see a system that performs highway trajectory planning. A combination of cleverly designed rules does work and offers real-time performance, but it cannot easily generalize and therefore does not perform acceptably in other environments. The bulk of self-driving technology today is still based on this approach. Note that the perception subsystem is based on deep learning, but everything else is non-differentiable.

Wave II started after 2015, when the initial experiments on imitation learning (also known as behavioral cloning) were made. Despite its end-to-end differentiability, the system is not able to generalize without vast amounts of training data, which require many human drivers.
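To make the idea of behavioral cloning concrete, here is a toy sketch: a one-parameter steering policy is fitted by gradient descent to demonstrations recorded from an "expert". Everything in it is an assumption made for illustration (the `expert_policy` gain, the lateral-offset state, the learning rate); real systems learn from camera images with deep networks, not from a single scalar.

```python
import random
from typing import List, Tuple

Demo = Tuple[float, float]  # (lateral offset from lane centre, expert steering command)


def expert_policy(offset: float) -> float:
    """Hypothetical expert driver: steer back toward the lane centre."""
    return -0.5 * offset


def collect_demonstrations(n: int, seed: int = 0) -> List[Demo]:
    """Record (state, action) pairs from the expert at randomly sampled offsets."""
    rng = random.Random(seed)
    states = [rng.uniform(-2.0, 2.0) for _ in range(n)]
    return [(s, expert_policy(s)) for s in states]


def clone_policy(demos: List[Demo], lr: float = 0.1, epochs: int = 200) -> float:
    """Fit steering = w * offset by gradient descent on the mean squared error."""
    w = 0.0
    for _ in range(epochs):
        # Gradient of mean((w * s - a)^2) with respect to w.
        grad = sum(2.0 * (w * s - a) * s for s, a in demos) / len(demos)
        w -= lr * grad
    return w


if __name__ == "__main__":
    demos = collect_demonstrations(100)
    w = clone_policy(demos)
    print(f"learned steering gain: {w:.4f}")
```

The learned policy is only trustworthy for offsets similar to those in the demonstrations, which hints at why generalization requires so much driving data.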

Wave III is at present an active research area, driven primarily by our inability to implement with deep neural networks alone things like long-term goal planning, causal reasoning, extracting meaning from text the way humans do, explaining the decisions of neural networks, and transferring what was learned in one task to another, even dissimilar, task. Artificial General Intelligence is the term usually associated with such capabilities.

The role of simulation in AI

Further, we will see a fusion of disciplines such as physical modeling and simulation with representation learning to help deep neural networks learn using data generated by domain specific simulation engines.

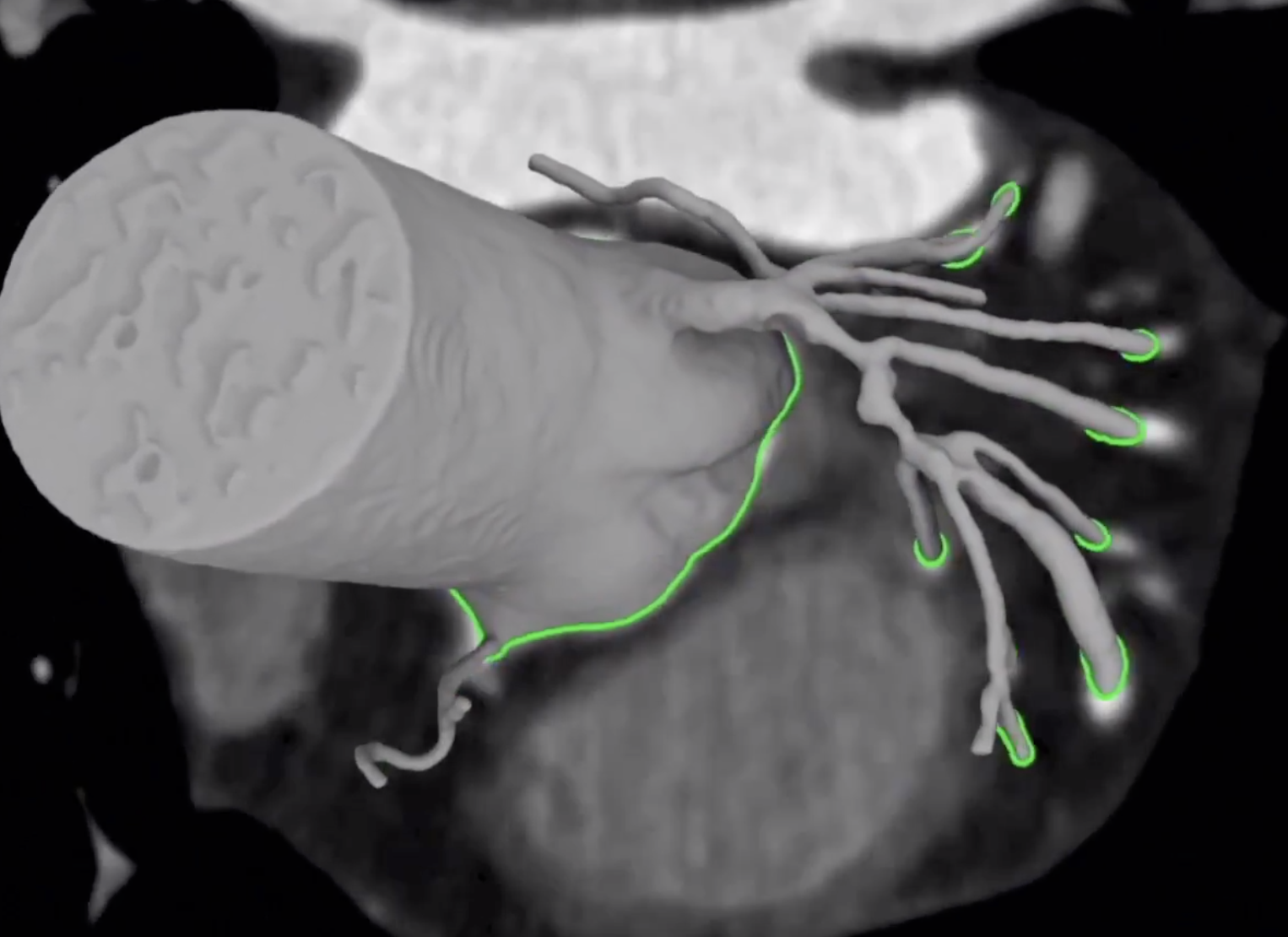

Reveal the stenosis: Generative augmented physical (Computational Fluid Dynamics) modeling from Computed Tomography scans

For example, in the picture above a CFD simulation is used to augment ML algorithms that make predictions and explain them. In mission-critical systems (such as medical diagnostic systems) everything must be explainable.

Simulation is also used to create photorealistic synthetic worlds and embed agents therein to allow engineers to design and validate agent behavior as shown in Fadaie (2019). A demonstration of such capability is shown here using NVIDIA’s Omniverse - a photorealistic 3D creation and simulation environment.