AI Agents for Robotics

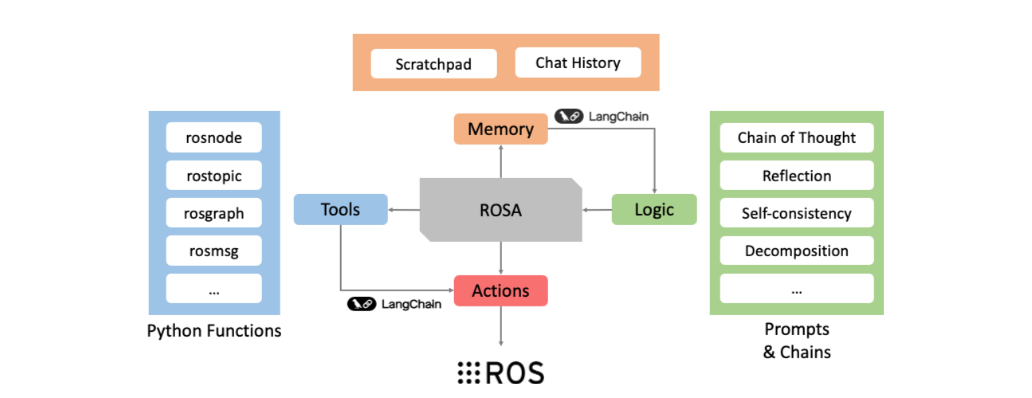

In this project we use AI agents based on the ReAct principle for robotics. Our aim is to design a system, building on earlier work at JPL, that allows the development of agents capable of supporting real-time interactions with robots for algorithm design (simulation mode) or for field verification, testing and monitoring. Because of the time constraints of this project, the next section narrows the goals to a smaller scope, with the understanding that what is built is a core capability that can be extended in the future.

Project Goals and Strategy

Your goal is to develop a new architecture that supports the use cases outlined in the ROSA paper, but with the following important differences:

AI agents should be decoupled from the simulator (ROS). The decoupling is achieved by establishing a swappable message-passing mechanism between ROS and the AI agent. This mechanism is based on nats.io and JetStream.
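One way to keep the message-passing layer swappable is to have the agent depend only on a small publish/subscribe interface. The following is a minimal sketch under stated assumptions: the `Transport` protocol, the `InMemoryTransport` class and the `robot.cmd` subject are hypothetical names chosen for illustration, and the in-memory implementation is only a stand-in that a NATS-backed class exposing the same two methods could later replace.

```python
from __future__ import annotations

import asyncio
from collections import defaultdict
from typing import Awaitable, Callable, Protocol

Handler = Callable[[bytes], Awaitable[None]]


class Transport(Protocol):
    """Hypothetical publish/subscribe surface that the agent depends on."""

    async def publish(self, subject: str, payload: bytes) -> None: ...

    async def subscribe(self, subject: str, handler: Handler) -> None: ...


class InMemoryTransport:
    """In-process stand-in; a NATS-backed class could offer the same methods."""

    def __init__(self) -> None:
        self._handlers: dict[str, list[Handler]] = defaultdict(list)

    async def publish(self, subject: str, payload: bytes) -> None:
        # Deliver the payload to every handler registered on this exact subject.
        for handler in list(self._handlers[subject]):
            await handler(payload)

    async def subscribe(self, subject: str, handler: Handler) -> None:
        self._handlers[subject].append(handler)


async def demo(transport: Transport) -> list[str]:
    """The agent side publishes a command; the ROS bridge side receives it."""
    received: list[str] = []

    async def on_command(payload: bytes) -> None:
        received.append(payload.decode())

    await transport.subscribe("robot.cmd", on_command)
    await transport.publish("robot.cmd", b"list_nodes")
    return received


if __name__ == "__main__":
    print(asyncio.run(demo(InMemoryTransport())))
```

Because `demo` only sees the `Transport` interface, neither the agent nor the ROS bridge needs to change when the in-memory stand-in is replaced by a real broker.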

AI agents should be decoupled, to the degree possible, from the libraries that define the agent abstraction. LangChain is a bloated library and we need a canonical approach to AI agent design. This will be facilitated by the pydantic.ai abstractions for agents, which are built on the structured data validation library pydantic and have a Python-centric design while still allowing graph-based definitions of the agent’s functionality.

Your strategy should be to always have a working system, even if this system is initially just a replica of the ROSA functionality. Then, by swapping out LangChain for pydantic_ai constructs, you maintain the functionality of the system while improving its performance and maintainability. Avoid rolling out nats.io and JetStream initially to implement the decoupling; you can do that later as time allows.

Replicate ROSA Milestone

Use this maze environment and launch the robot, the Gazebo simulator and RViz.

You need access to an LLM reasoning API. See here for free endpoints you can use. You may start with Hugging Face serverless endpoints, but for a few dollars you can use the OpenAI or Anthropic API. Be careful to set a spending limit of $10 in your account settings. Try the reasoning capabilities of the free APIs before moving to advanced models.

Replicate the chats that you have seen in the ROSA paper, restricted to the ROS CLI only, such as listing nodes, subscribing to a topic, etc.
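An agent tool for ROS CLI queries can be as simple as a function that runs an allowlisted command and returns its output lines. The sketch below assumes a ROS 2 installation (where `ros2 node list` and `ros2 topic list` are available); the names `ALLOWED_COMMANDS`, `run_subprocess` and `ros_cli_tool` are hypothetical and are not taken from ROSA. The `runner` parameter is injectable so the function can be exercised without ROS installed.

```python
from __future__ import annotations

import subprocess
from typing import Callable, Sequence

# Read-only ROS 2 CLI invocations the agent may run (an assumed allowlist).
ALLOWED_COMMANDS = {
    "list_nodes": ["ros2", "node", "list"],
    "list_topics": ["ros2", "topic", "list"],
}

Runner = Callable[[Sequence[str]], str]


def run_subprocess(argv: Sequence[str]) -> str:
    """Run a command and return its stdout; raises if the command fails."""
    done = subprocess.run(
        list(argv), capture_output=True, text=True, check=True, timeout=10
    )
    return done.stdout


def ros_cli_tool(name: str, runner: Runner = run_subprocess) -> list[str]:
    """Run one allowlisted command and return its non-empty output lines."""
    if name not in ALLOWED_COMMANDS:
        raise ValueError(f"unknown tool: {name!r}")
    output = runner(ALLOWED_COMMANDS[name])
    return [line.strip() for line in output.splitlines() if line.strip()]
```

Restricting the agent to named, read-only commands keeps the LLM from composing arbitrary shell strings, which is a design choice of this sketch rather than a requirement of the project.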

Enhance ROSA Milestone

Introduce pydantic_ai to define the agent’s functionality. There is a distinct (but unverified) possibility that both LangChain and pydantic_ai can be used at the same time, which is useful for testing purposes.

Enable the pydantic agent to move the robot to a goal location in the maze. The goal location may be absolute or semantic, e.g. “go in front of a table/bench”. The agent should be able to use the LLM reasoning API to reason about the maze and the goal location, and the robot must successfully execute the instruction while streaming responses back to the chat interface.
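A semantic goal such as “go in front of a table” eventually has to become a coordinate that a navigation stack can accept. The sketch below illustrates one possible resolution step and is not a prescribed design: the `LANDMARKS` table and its coordinates are hypothetical placeholders, and “in front of” is interpreted, as an assumption, as stopping a fixed stand-off distance short of the landmark along the straight line from the robot.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose2D:
    """Planar position in the map frame, in metres."""

    x: float
    y: float


# Hypothetical landmark positions; real values would come from the maze world.
LANDMARKS = {"table": Pose2D(3.0, 4.0), "bench": Pose2D(-2.0, 0.0)}


def resolve_semantic_goal(robot: Pose2D, landmark: str, standoff: float = 1.0) -> Pose2D:
    """Return a point `standoff` metres short of the landmark, on the robot's side."""
    key = landmark.strip().lower()
    if key not in LANDMARKS:
        raise ValueError(f"unknown landmark: {landmark!r}")
    target = LANDMARKS[key]
    dx, dy = target.x - robot.x, target.y - robot.y
    distance = math.hypot(dx, dy)
    if distance <= standoff:
        # Already within the stand-off distance, so no motion is needed.
        return robot
    scale = (distance - standoff) / distance
    return Pose2D(robot.x + dx * scale, robot.y + dy * scale)
```

An absolute goal needs no resolution and can be passed through as a `Pose2D` directly, so the agent only has to decide which of the two forms the user's instruction takes.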