Chat with your Video Library

In this project you will be exposed to the engineering aspects of Retrieval Augmented Generation (RAG) systems as in this key book reference.

RAG combines the strengths of retrieval-based and generation-based models, enabling the model to retrieve relevant information from a large corpus and generate a coherent domain-specific response.

No shortcuts such as heavy usage of langchain and other bloated high level RAG frameworks are going to be acceptable as solutions to this project. You need to understand the components of the RAG system and how they interact with each other.

Don’t attempt to do this project before reading the overview sections of RAG in the book - you need to clone the book’s repository as well if you are to implement Option 1 below.

Project Goals and Strategy

In our case the task is to build a chatbot application where students are able to ask questions about the course and the bot should be able to generate responses that are grounded to specific video segments (clips) where the segments are the relevant video clips / segments from the course youtube channel.

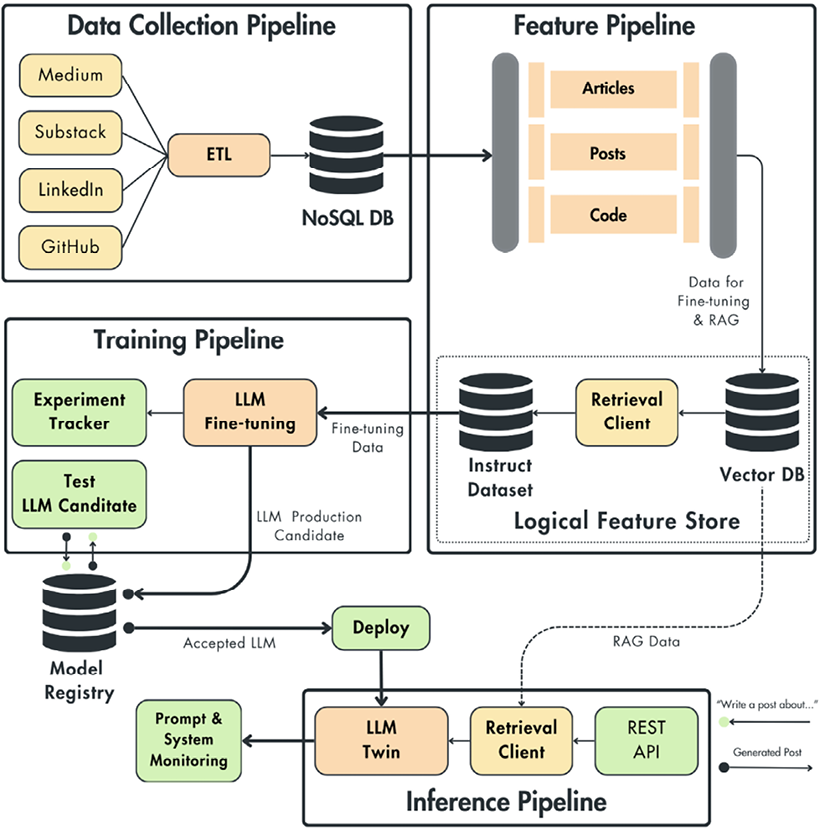

The individual components of a general RAG system are shown in Figure 1.

You have two options to deal with the complexity of the project:

Option 1

You will be integrating into the book’s repository additional elements into the components shown in Figure 1.

Option 2

You will be using the book’s repository as a reference and you will be implementing the components of the RAG system from scratch. You can use a canonical RAG implementation to implement the components of the RAG system. The later approach is just an example and you are free to use any other RAG framework you know.

Either of the two options is acceptable. The only requirement is that you need to be able to show a working RAG system at the end of the project. Your strategy is to split up the project into multiple milestones and to have a proof of concept (POC) with all the components interacting with each other even if the end to end flow fails. Do not spend a lot of time on each component at the expense of nothing to show by the project deadline. Each iteration should improve the quality of the components. Despite that you have no per-milestone deadlines, we can guarantee that you will be unable to finish the project if you start late or cant keep up.

Data Collection Pipeline (ETL) Milestone

Here you will use the Huggingface Dataset repository and develop a pipeline that is able to stream the video dataset that is stored in webdataset format, decode its frames and other metadata as described here and store them in the MongoDB database.

Its critical that each image stored in MongoDB must be aligned with the corresponding subtitle. It is also important to understand how to remove redundancy such as multiple images in the video showing the same content.

We are in the process of populating the streamable webdataset.

Finetuning Milestone

Follow instructions from a similar finetuning exercise to finetune the model to the required domain - captioned handwritten images. You are free to use other baseline VLM (Vision Language Models) depending on your hardware such as Gemma 3.

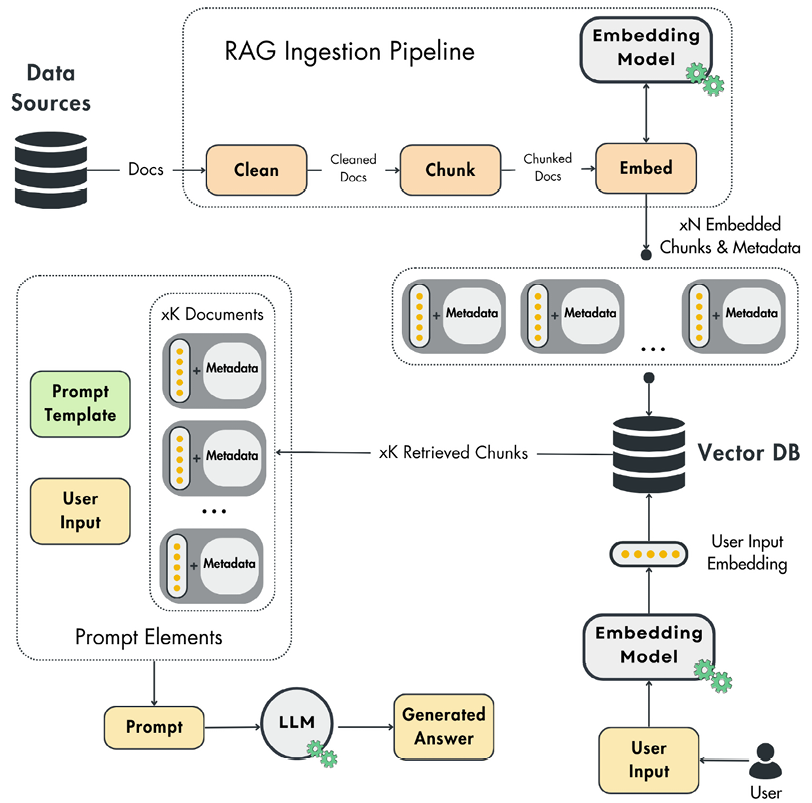

Clearly explain how you implemented semantic chunking in your implementation.

PS: The finetuning tutorial linked must work in free colab but you may consider paying 1 month fee to Google (or use other providers) to avoid issues with finetuning.

Featurization Pipeline Milestone

Implement the featurization pipeline that will convert the chucked data (images and subtitles) into a format that can be used by the RAG model. The featurization pipeline should be able to store the featurized data as vectors into Qdrant.

Baseline Featurization and Retrieval Pipeline

Retrieval Milestone

Implement the retrieval pipeline that will accept a query such as “Explain how ResNets work in the videos.” and return the most relevant video segments (clips) from the database.

Deploying the App Milestone

Develop a gradio app that will allow the user to interact with the RAG system using Ollama and the facility to pull your model from HF hub. The app should be able to answer the following questions with the video clip(s). You need to use streaming for producing the answers.

In addition to the app, you need to ensure that there is a demonstration.ipynb notebook in your github repo where the questions and related video clips are shown (video clips are few MBs and should not be an issue to commit them as part of your repo).

There should be a minimum of 3 video clips. Feel free to add more questions that highlight the capabilities of your system.

- “Using only the videos, explain how ResNets work.”

- “Using only the videos, explain the advantages of CNNs over fully connected networks.”

- “Using only the videos, explain the the binary cross entropy loss function.”

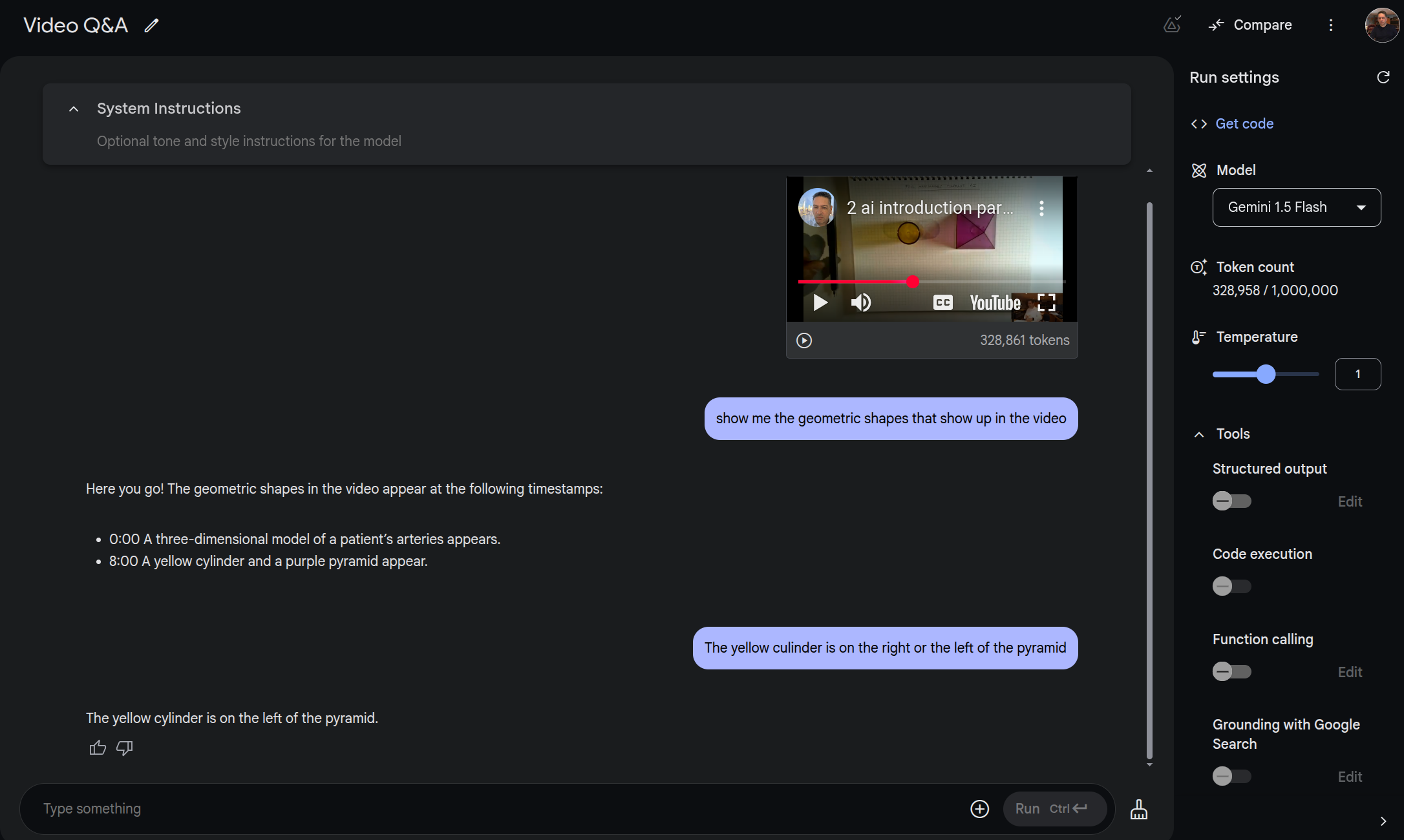

Example Video Q&A

Google’s AI Studio has a video Q&A capability that is similar to this project. They can ground the question to the timestamps of the video but the limitations of the system is that it can only address one video and the video segments although identifiable via timestamps, they are not produced at the output.

For the lecture video input, we asked questions to the model as shown below. The first set of questions was asked in the Gemini 1.5 Flash that is not as powerful as other reasoning/thinking models. Nevertheless the model was able to answer such questions very well and this is especially notable in the case of the last question that relates the location of two objects.

A more powerful reasoning model will provide chains of thought to questions as shown below:

What is a rational agent in the video

Here’s my thought process for answering the request “what is a rational agent in the video”:

Identify the core question: The user wants to know the definition or description of a “rational agent” as presented in the provided video. This is crucial – the answer must be based only on the video content, not external AI knowledge.

Scan the transcript for keywords: I’ll look for the specific term “rational agent” and related concepts like “rational agent approach,” “rationality,” “optimal,” “objective,” “goal,” etc.

Locate relevant segments:

- The term “Rational Agent approach” appears explicitly around 5:55.

- The speaker starts discussing this approach at 17:55.

Analyze the relevant segment (17:55 - 18:18):

- The speaker introduces “The Rational Agent approach”.

- Key phrases used to describe it:

- “A rational agent acts to achieve the best outcome.”

- “The rational approach encompasses the syllogism and Turing-test approaches.” (This suggests it’s a broader category or builds upon others).

- “We still need probably correct inference and the formal representations of logic as well as the ability to perceive, communicate, and learn to achieve a good outcome.” (This lists capabilities needed for a rational agent, but isn’t the definition itself).

- “We need to generalize these approaches to include ‘good-enough’ inference and adaptation to the changing environment contexts that the agent is facing without giving up on the mathematical formality that utility theory allows us to design such agents.” (Again, requirements for the approach, mentioning utility theory).

- “The agent facing a fire is an instructive example. There maybe no time for optimal reasoning to a conclusion (e.g., run) but a simple reflexive plan can offer the best outcome.” (This provides an example illustrating the goal – best outcome – even if reasoning isn’t perfectly optimal due to constraints like time).

- “…we will be spending quite a lot of time [on] the so-called rational agent approach.”

- “In the rational agent approach, we have an agent that finally, at the end of the day, will need to satisfy an objective.”

- “And that objective sometimes is called utility function, sometimes we will meet it as value functions…”

- “…the agent is going to move and act in this kind of environment in the in this kind of optimal way.”

- “So that is the rational agent…”

Synthesize the information from the video: Based only on the speaker’s words in the relevant sections, a rational agent is described as:

- An agent that acts to achieve the best outcome.

- It needs capabilities like perception, communication, learning, inference (even “good-enough” inference), and adaptation.

- It operates based on mathematical formality, often involving utility theory.

- Its actions aim to satisfy an objective, which might be represented by a utility function or value function.

- It aims to act in an “optimal way” within its environment to achieve its goal/objective.

- It’s presented as one of the four main approaches to AI discussed in that section (alongside Turing Test, Cognitive Model, Syllogism-based).

Format the answer: Present the synthesized definition clearly, quoting or paraphrasing directly from the video’s content. Include the timestamps where the relevant information is discussed. Avoid adding external definitions or interpretations. Ensure the answer directly addresses “what is a rational agent in the video”.

Refine: Read through the generated answer. Does it accurately reflect what the speaker said? Is it concise? Does it directly answer the user’s question based only on the video? Yes. The key defining characteristic mentioned repeatedly is acting to achieve the best outcome or satisfying an objective, often in an optimal way, using concepts like utility functions.

Based on the video, the speaker describes the Rational Agent approach starting around 17:55. Here’s a summary of how it’s defined:

- Core Goal: A rational agent acts to achieve the best outcome. (17:55, 18:04)

- Objective-Driven: The agent needs to satisfy an objective. This objective is sometimes represented by a utility function or value functions. (18:06 - 18:19)

- Optimal Action: The agent aims to act in an optimal way within its environment to achieve its goal. (18:07, 18:43)

- Necessary Capabilities: To achieve this, the agent needs abilities like perceiving, communicating, learning, inference (even “good-enough” inference), and adapting to changing environments. (17:59 - 18:06)

- Formal Basis: The approach often relies on mathematical formality, specifically mentioning utility theory. (18:05)

In essence, the video presents a rational agent as an entity designed to take actions that maximize its chances of achieving a specific goal or objective, often defined mathematically (e.g., maximizing a utility function), given its perceptions and capabilities.