World Models

For each problem we can define a set of world models, each representing one possible state (configuration) of the environment that the agent may be in.

Wumpus World

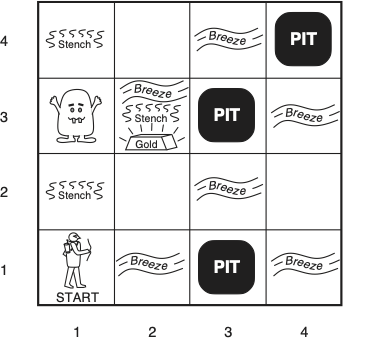

We will use a very simple world, known in the literature as the Wumpus world (a cave), shown in the figure.

Wumpus World. It was inspired by the video game Hunt the Wumpus, created by Gregory Yob in 1973. The Wumpus world is a cave with a 4×4 grid of rooms connected by passageways, 16 rooms in total. An agent moves through this world. One room contains a beast called the Wumpus, which eats anyone who enters its room. The agent can shoot the Wumpus, but it has only a single arrow. Some rooms contain bottomless pits, and an agent that falls into one is stuck there forever. The agent’s goal is to find the gold and climb out of the cave without falling into a pit or being eaten by the Wumpus. The agent is rewarded if it leaves with the gold and penalized if it is eaten by the Wumpus or falls into a pit.

The Performance, Environment, Actuators, and Sensors (PEAS) description is summarized in the table.

| Attribute | Description |
|---|---|
| Environment | The locations of the gold and the Wumpus are uniformly random, while any cell other than the starting cell can be a pit with probability 0.2. One instantiation of the environment, which stays fixed throughout the problem-solving exercise, is shown in the figure above. The agent always starts at [1,1] facing right. |
| Performance | +1000 points for picking up the gold. This is the goal of the agent. |
| | −1000 points for dying, that is, for entering a square containing a pit or a live Wumpus. |
| | −1 point for each action taken, so that the agent avoids unnecessary actions. |
| | −10 points for using the arrow to try to kill the Wumpus. |
| Actions | Turn 90° left or right. |
| | Forward (walk one square) in the current direction. |
| | Grab an object in the current square. |
| | Shoot the single arrow in the current direction; it flies in a straight line until it hits a wall or the Wumpus. |
| | Climb out of the cave. This is possible only in the starting location [1,1]. |
| Sensors | Stench when the Wumpus is in an adjacent square (directly, not diagonally). |
| | Breeze when an adjacent square has a pit. |
| | Glitter when the gold is in the current square. |
| | Bump when the agent walks into an enclosing wall (the action then has no effect). |
| | Scream when the arrow hits the Wumpus, killing it. |
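
The environment, sensor, and performance rows of the table can be sketched in a few lines of Python. This is a minimal sketch rather than a reference implementation: the names `sample_world`, `percept`, and `score` are hypothetical, the Wumpus and gold are assumed never to be placed in the starting square, and the −10 arrow penalty is assumed to be charged on top of the −1 cost of the shoot action.

```python
import random

SIZE = 4          # 4x4 cave; squares are (x, y) with x, y in 1..4
START = (1, 1)    # the agent always starts here


def neighbors(square):
    """Squares directly (not diagonally) adjacent to `square`, inside the cave."""
    x, y = square
    candidates = [(x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)]
    return [(cx, cy) for cx, cy in candidates
            if 1 <= cx <= SIZE and 1 <= cy <= SIZE]


def sample_world(rng, pit_prob=0.2):
    """Draw one cave layout (assumption: Wumpus and gold avoid the start square)."""
    squares = [(x, y) for x in range(1, SIZE + 1) for y in range(1, SIZE + 1)]
    non_start = [s for s in squares if s != START]
    return {
        "wumpus": rng.choice(non_start),
        "gold": rng.choice(non_start),
        # every non-start square is a pit independently with probability pit_prob
        "pits": {s for s in non_start if rng.random() < pit_prob},
    }


def percept(world, square):
    """Stench, Breeze and Glitter sensed in `square` (Bump and Scream omitted)."""
    adjacent = neighbors(square)
    return {
        "Stench": world["wumpus"] in adjacent,
        "Breeze": any(p in adjacent for p in world["pits"]),
        "Glitter": world["gold"] == square,
    }


def score(num_actions, arrow_used=False, got_gold=False, died=False):
    """Performance measure; assumes the arrow penalty is added to the action cost."""
    return 1000 * got_gold - 1000 * died - num_actions - 10 * arrow_used


# Partial layout holding only the contents named later in the walkthrough.
figure_world = {"wumpus": (1, 3), "gold": (2, 3), "pits": {(3, 1)}}
print(percept(figure_world, (2, 1)))
print(sample_world(random.Random(0)))
```

Passing a seeded `random.Random` instance keeps the sampled layout reproducible, which is convenient when one instantiation has to stay fixed for the whole exercise.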

The environment is:

- Static: The Wumpus and the pits do not move.

- Discrete: The states, actions, and percepts are all discrete.

- Partially observable: The agent perceives only its immediate surroundings, that is, its current square and the signals coming from adjacent rooms.

- Sequential: The order of actions matters, because earlier actions affect what the agent can do later.

But why does this problem require reasoning? Simply put, the agent needs to infer the state of adjacent cells from what its perception subsystem reports in the current cell, combined with its knowledge of the rules of the Wumpus world. These inferences let the agent move to an adjacent cell only when it has determined that the cell is OK to move into.

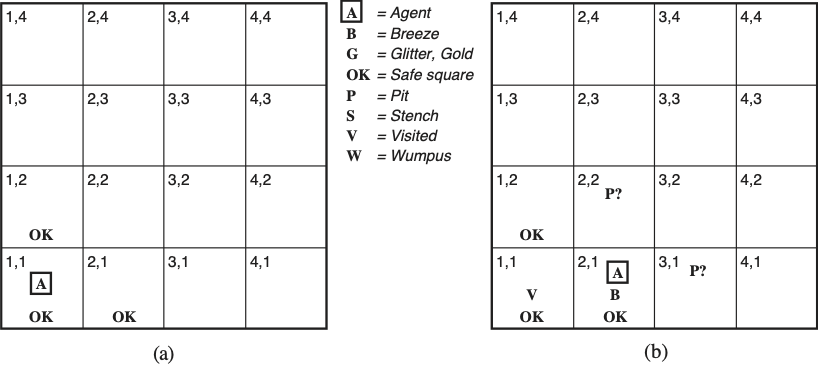

Agent A moving in the environment, inferring the contents of adjacent cells. (a) Initial environment after percept [Stench, Breeze, Glitter, Bump, Scream]=[None, None, None, None, None]. (b) After one move with percept [Stench, Breeze, Glitter, Bump, Scream]=[None, Breeze, None, None, None].

| Environment State | Description |
|---|---|
| (a) | Agent A starts at [1, 1] facing right. The background knowledge β assures agent A that it is at [1, 1] and that this square is OK, meaning certainly not deadly. |
| | Agent A gets the percept [Stench, Breeze, Glitter, Bump, Scream]=[None, None, None, None, None]. |
| | Agent A infers from this percept and β that both of its neighboring squares, [1, 2] and [2, 1], are also OK: “If there were a Pit (Wumpus), there would be a Breeze (Stench) here, but there isn’t, so ...”. The knowledge base (KB) enables agent A to discover certainties about parts of its environment, even without visiting those parts. |
| (b) | Agent A is cautious and will only move to OK squares. Agent A walks into [2, 1] because it is OK and lies in the direction agent A is facing, so it is cheaper than the other choice, [1, 2]. Agent A also marks [1, 1] as Visited. |
| | Agent A perceives [Stench, Breeze, Glitter, Bump, Scream]=[None, Breeze, None, None, None]. Agent A infers: “At least one of the adjacent squares [1, 1], [2, 2] and [3, 1] must contain a Pit. There is no Pit in [1, 1] by my background knowledge β. Hence [2, 2] or [3, 1] or both must contain a Pit.” Agent A therefore cannot be certain that either [2, 2] or [3, 1] is safe, so [2, 1] is a dead end for a cautious agent like A. |
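
The bookkeeping in states (a) and (b) can be sketched as follows. This is a minimal sketch under stated assumptions, not the agent's actual knowledge base: it encodes only the rule "no Stench and no Breeze here means every neighbor is OK", and the names `mark_safe_neighbors`, `ok`, `visited`, and `pit_candidates` are hypothetical.

```python
SIZE = 4  # 4x4 cave; squares are (x, y) with x, y in 1..4


def neighbors(square):
    """Squares directly (not diagonally) adjacent to `square`, inside the cave."""
    x, y = square
    candidates = [(x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)]
    return [(cx, cy) for cx, cy in candidates
            if 1 <= cx <= SIZE and 1 <= cy <= SIZE]


def mark_safe_neighbors(ok, square, stench, breeze):
    """If `square` has neither Stench nor Breeze, all its neighbors are OK."""
    if not stench and not breeze:
        ok.update(neighbors(square))


ok = {(1, 1)}      # background knowledge: the start square is not deadly
visited = set()

# State (a): percept at [1, 1] is all None.
visited.add((1, 1))
mark_safe_neighbors(ok, (1, 1), stench=False, breeze=False)

# State (b): the agent walks to [2, 1] and senses a Breeze.
visited.add((2, 1))
mark_safe_neighbors(ok, (2, 1), stench=False, breeze=True)

# Neighbors of [2, 1] that are not known to be OK may hold the Pit.
pit_candidates = sorted(n for n in neighbors((2, 1)) if n not in ok)

# OK squares the cautious agent has not explored yet.
unvisited_ok = ok - visited

print(pit_candidates)   # squares that may contain the Pit
print(unvisited_ok)     # where a cautious agent can still go
```

With these percepts the only unvisited OK square is [1, 2], which matches the observation that [2, 1] is a dead end for a cautious agent.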

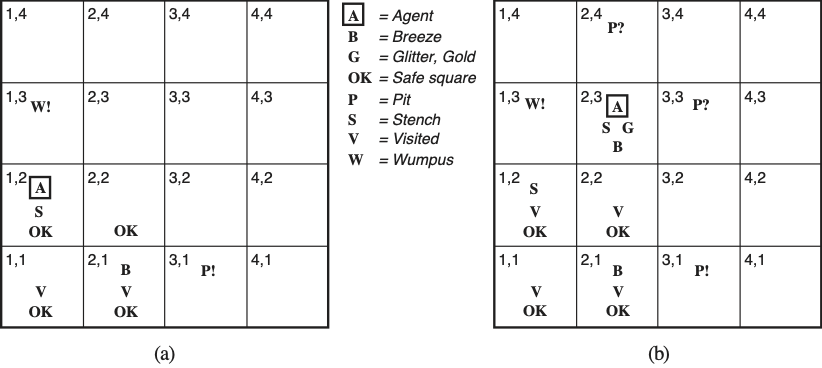

Agent A moving in the environment, inferring the contents of adjacent cells. (a) After the 3rd move and percept [Stench, Breeze, Glitter, Bump, Scream]=[Stench, None, None, None, None]. (b) After the 5th move with percept [Stench, Breeze, Glitter, Bump, Scream]=[Stench, Breeze, Glitter, None, None].

| Environment State | Description |
|---|---|
| (a) | Agent A has turned back from the dead end [2, 1] and walked to the other OK choice, [1, 2], to examine it instead. |
| | Agent A perceives [Stench, Breeze, Glitter, Bump, Scream]=[Stench, None, None, None, None]. |
| | Agent A infers, also using earlier percepts: “The Wumpus is in an adjacent square. It is not in [1, 1]. It is not in [2, 2] either, because then I would have sensed a Stench in [2, 1]. Hence it is in [1, 3].” |
| | Agent A infers, also using earlier inferences: “There is no Breeze here, so there is no Pit in any adjacent square. In particular, there is no Pit in [2, 2] after all. Hence there is a Pit in [3, 1].” |
| | Agent A finally infers: “[2, 2] is OK after all: now it is certain that it has neither a Pit nor the Wumpus.” |
| (b) | Agent A walks to the only unvisited OK choice, [2, 2]. There is no Breeze here, and since the square of the Wumpus is now known too, [2, 3] and [3, 2] are OK too. |
| | Agent A walks into [2, 3] and senses the Glitter there, so it grabs the gold and succeeds. |
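
The chain of inferences in state (a) can be checked by brute force: enumerate every candidate world model and keep only those that agree with the three percepts gathered so far. The sketch below is one possible way to do this, assuming exactly one Wumpus and considering pits only in the squares bordering the visited ones, since pits farther away cannot affect these percepts. The names `consistent`, `pit_subsets`, `models`, and `is_safe` are hypothetical.

```python
from itertools import combinations

SIZE = 4  # 4x4 cave; squares are (x, y) with x, y in 1..4


def neighbors(square):
    """Squares directly (not diagonally) adjacent to `square`, inside the cave."""
    x, y = square
    candidates = [(x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)]
    return [(cx, cy) for cx, cy in candidates
            if 1 <= cx <= SIZE and 1 <= cy <= SIZE]


# Percepts gathered so far, as (stench, breeze) per visited square.
percepts = {
    (1, 1): (False, False),
    (2, 1): (False, True),
    (1, 2): (True, False),
}

visited = set(percepts)
all_squares = [(x, y) for x in range(1, SIZE + 1) for y in range(1, SIZE + 1)]
unvisited = [s for s in all_squares if s not in visited]
# Unvisited squares that border a visited one: [1, 3], [2, 2], [3, 1].
frontier = sorted({n for v in visited for n in neighbors(v)} - visited)


def consistent(wumpus, pits):
    """True if this candidate world would produce exactly the observed percepts."""
    for square, (stench, breeze) in percepts.items():
        adjacent = neighbors(square)
        if stench != (wumpus in adjacent):
            return False
        if breeze != any(p in pits for p in adjacent):
            return False
    return True


def pit_subsets(squares):
    """Yield every subset of `squares` as a set of pit locations."""
    for k in range(len(squares) + 1):
        for subset in combinations(squares, k):
            yield set(subset)


# Candidate world = (Wumpus square, set of frontier pits).
models = [(w, pits) for w in unvisited for pits in pit_subsets(frontier)
          if consistent(w, pits)]


def is_safe(square):
    """A frontier square is OK if no consistent model puts a hazard there."""
    return all(square != w and square not in pits for w, pits in models)


print(models)
print({s: is_safe(s) for s in frontier})
```

Under these assumptions a single world model survives, with the Wumpus in [1, 3] and a Pit in [3, 1], so [2, 2] is the only frontier square reported as safe. Note that `is_safe` is only meaningful for frontier squares, because pits beyond the frontier are not modeled.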