AI in Robotics

Figure 1 shows a robot following instructions given in natural language. The instructions are typed in this demo, but spoken instructions are also feasible today.

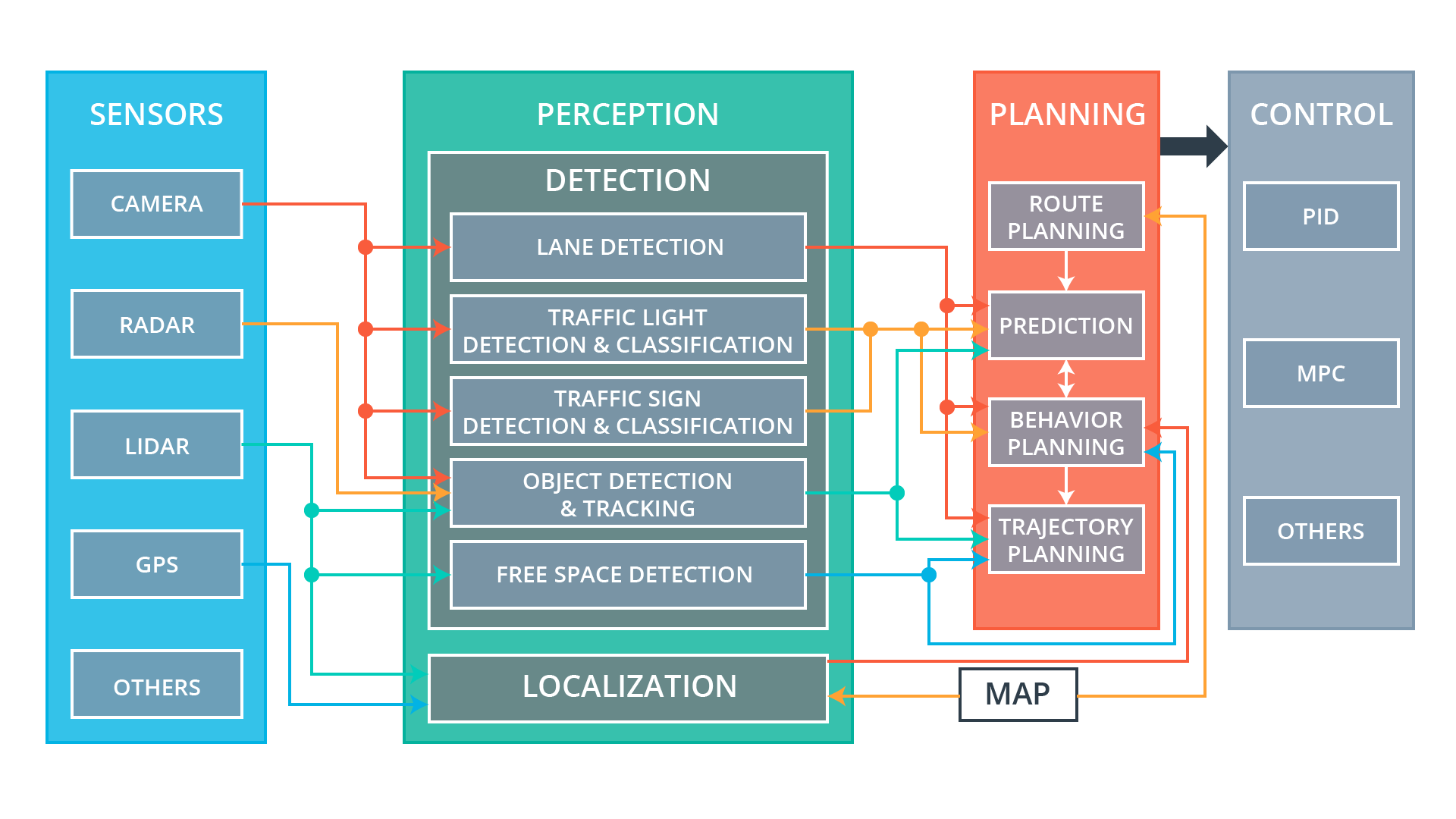

In late 2022, OpenAI released ChatGPT, a chatbot built on a large language model (LLM) that can understand and generate human-like text. This breakthrough led to a surge of interest in AI applications across many fields, including robotics. Today’s robotics landscape is still absorbing the effects of the LLM revolution. To understand how pervasive the impact is, it helps to turn the clock back and look at a traditional autonomous vehicle architecture, shown in Figure 2, that is still considered state of the art.

Architecture

Sensing

- Camera: Visual input for image-based perception (lanes, signs, lights, objects).

- Radar: Detects objects and measures their speed/distance (good in poor visibility).

- LIDAR: Laser-based sensor for 3D mapping and object detection.

- GPS: Provides global position.

- Others: Can include ultrasonic sensors, IMUs, etc.

Perception

- Detection & Classification: Identifies and labels objects such as traffic lights, signs, pedestrians, and vehicles.

- Tracking: Resolves occlusions and tracks objects over time.

- Free Space Detection: Detects the drivable area.

- Localization: Determines the car’s precise position in the environment using cameras, LIDAR, and, more generally, sensor fusion.
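To make the sensor fusion idea in the localization bullet concrete, here is a minimal sketch that combines two independent position estimates by inverse-variance weighting. This is one simple fusion rule, not necessarily what a production stack uses. The function name `fuse` and the GPS and LIDAR numbers are hypothetical and chosen only for illustration.

```python
def fuse(estimates):
    """Fuse independent 1D estimates given as (value, variance) pairs.

    Each estimate is weighted by the inverse of its variance, so the
    more certain sensor pulls the result toward its own reading.
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total  # fused value and fused variance


# Hypothetical along-road positions in meters with their variances.
gps_estimate = (105.0, 4.0)    # noisy global fix
lidar_estimate = (100.0, 1.0)  # tighter map-matched estimate

position, variance = fuse([gps_estimate, lidar_estimate])
print(position, variance)
```

The fused variance is smaller than either input variance, which is the basic reason fusing sensors tends to beat relying on any single one.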

Planning

- Global Planning: High-level route to the destination.

- Prediction: Predicts the future actions of other objects (cars, pedestrians).

- Behavior Planning: Decides what the vehicle should do next (stop, yield, change lane, etc.) based on predictions and goals.

- Trajectory Planning: Computes a detailed path (trajectory) for the car to follow safely and smoothly.
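The behavior planning step above can be sketched as a small rule-based decision function that consumes the output of prediction. This is a deliberately simplified illustration: the names `Maneuver`, `PredictedObject`, and `choose_maneuver`, along with the time thresholds, are assumptions made for this example. Real planners weigh many more factors.

```python
from dataclasses import dataclass
from enum import Enum


class Maneuver(Enum):
    KEEP_LANE = "keep_lane"
    YIELD = "yield"
    STOP = "stop"


@dataclass
class PredictedObject:
    kind: str                  # e.g. "pedestrian" or "vehicle"
    time_to_conflict_s: float  # predicted time until paths cross


def choose_maneuver(light_state, predictions,
                    stop_below_s=2.0, yield_below_s=5.0):
    """Pick the next maneuver from the light state and predictions."""
    if light_state == "red":
        return Maneuver.STOP
    # The most urgent predicted conflict drives the decision.
    soonest = min((p.time_to_conflict_s for p in predictions),
                  default=float("inf"))
    if soonest < stop_below_s:
        return Maneuver.STOP
    if soonest < yield_below_s:
        return Maneuver.YIELD
    return Maneuver.KEEP_LANE


crossing = PredictedObject(kind="pedestrian", time_to_conflict_s=1.5)
print(choose_maneuver("green", [crossing]))
```

The chosen maneuver would then be handed to trajectory planning, which turns it into a concrete path.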

Control

- PID (Proportional-Integral-Derivative) and MPC (Model Predictive Control): Algorithms that compute the vehicle’s steering, throttle, and braking commands so that it follows the planned trajectory.
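As an illustration of the PID idea, the sketch below uses a textbook discrete PID controller to drive a vehicle's speed toward a target. The vehicle model is a deliberately crude assumption (speed changes by the commanded acceleration, with no drag or delay), and the gains, acceleration limits, and the `simulate` helper are hypothetical values picked for this example rather than tuned parameters.

```python
class PID:
    """Textbook discrete PID controller."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def step(self, error, dt):
        self.integral += error * dt
        # No derivative on the first step, since there is no history yet.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return (self.kp * error
                + self.ki * self.integral
                + self.kd * derivative)


def simulate(target_speed=20.0, steps=300, dt=0.1):
    """Run the controller against a toy model: d(speed)/dt = acceleration."""
    pid = PID(kp=0.8, ki=0.2, kd=0.05)
    speed = 0.0
    for _ in range(steps):
        accel = pid.step(target_speed - speed, dt)
        accel = max(-5.0, min(3.0, accel))  # assumed actuator limits, m/s^2
        speed += accel * dt
    return speed


final_speed = simulate()
print(round(final_speed, 2))
```

Because the integral keeps accumulating while the acceleration is clamped, this simple version overshoots the target before settling. Practical controllers usually add some form of anti-windup.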

All these subsystems are tightly integrated and work together to ensure the robot can navigate safely and efficiently in complex environments.
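The data flow between the four subsystems can be sketched as a chain of functions, each consuming the output of the previous stage. Every function body here is a toy stand-in, and all names, dictionary keys, and thresholds are assumptions made for illustration. The point is only the sense, perceive, plan, control ordering.

```python
def sense(world):
    # Stand-in for camera, radar, LIDAR, and GPS drivers.
    return {"obstacle_distance_m": world["obstacle_distance_m"],
            "speed_mps": world["speed_mps"]}


def perceive(readings, safe_distance_m=30.0):
    # Turn raw readings into a scene description.
    return {"obstacle_ahead": readings["obstacle_distance_m"] < safe_distance_m,
            "speed_mps": readings["speed_mps"]}


def plan(scene, cruise_speed_mps=15.0):
    # Stop for obstacles, otherwise cruise.
    target = 0.0 if scene["obstacle_ahead"] else cruise_speed_mps
    return {"target_speed_mps": target}


def control(scene, trajectory, gain=0.5):
    # Proportional speed control toward the planned target.
    error = trajectory["target_speed_mps"] - scene["speed_mps"]
    return {"acceleration_mps2": gain * error}


def tick(world):
    """One pass through the sense -> perceive -> plan -> control loop."""
    readings = sense(world)
    scene = perceive(readings)
    trajectory = plan(scene)
    return control(scene, trajectory)


print(tick({"obstacle_distance_m": 100.0, "speed_mps": 10.0}))
print(tick({"obstacle_distance_m": 10.0, "speed_mps": 10.0}))
```

In a real vehicle this loop runs many times per second, and each stage is a substantial subsystem in its own right.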

In this course we will start with the basics of these subsystems, then gradually introduce ideas from the last few years that have dramatically transformed the capabilities of robotic systems, as described in ?@sec-lvm - ?@sec-vla.