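```python
import numpy as np
import matplotlib.pyplot as plt
from scipy.stats import multivariate_normal

# create_toy_data, PolynomialFeature and BayesianRegression are assumed to be
# defined or imported earlier in the notebook; this excerpt does not show them.


def linear(x):
    # True line that generates the data: t = -0.3 + 0.5 x
    return -0.3 + 0.5 * x


# 20 noisy observations (noise std 0.1) of the line on [-1, 1]
x_train, y_train = create_toy_data(linear, 20, 0.1, [-1, 1])
# Dense inputs for plotting predictions
x = np.linspace(-1, 1, 100)
# 100 x 100 grid over the parameter plane (w0, w1) for the density contours
w0, w1 = np.meshgrid(
    np.linspace(-1, 1, 100),
    np.linspace(-1, 1, 100))
# Shape (100, 100, 2): one (w0, w1) vector per grid point
w = np.array([w0, w1]).transpose(1, 2, 0)
```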
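The cell above relies on a `create_toy_data` helper that this excerpt never defines, and it builds the parameter grid with a stack-and-transpose. The sketch below pairs a hypothetical stand-in for the helper (its signature is inferred from the call `create_toy_data(linear, 20, 0.1, [-1, 1])`; the shuffling and the `rng` parameter are assumptions) with a check of the grid shapes that `multivariate_normal.pdf` expects.

```python
import numpy as np
from scipy.stats import multivariate_normal


def create_toy_data(func, sample_size, std, domain=(-1.0, 1.0), rng=None):
    """Hypothetical stand-in for the notebook's helper: noisy samples of func."""
    rng = np.random.default_rng() if rng is None else rng
    # Evenly spaced inputs, shuffled so early points spread over the domain (assumption)
    x = np.linspace(domain[0], domain[1], sample_size)
    rng.shuffle(x)
    # Targets = true function + Gaussian noise with standard deviation std
    t = func(x) + rng.normal(scale=std, size=x.shape)
    return x, t


def linear(x):
    return -0.3 + 0.5 * x


x_train, y_train = create_toy_data(linear, 20, 0.1, [-1, 1], rng=np.random.default_rng(0))

# meshgrid returns two (100, 100) coordinate arrays
w0, w1 = np.meshgrid(np.linspace(-1, 1, 100), np.linspace(-1, 1, 100))
# Stacking gives (2, 100, 100); transpose(1, 2, 0) moves the parameter axis last
w = np.array([w0, w1]).transpose(1, 2, 0)
print(w.shape)  # (100, 100, 2)

# multivariate_normal.pdf treats the last axis as the vector dimension,
# so it returns one density value per grid point
density = multivariate_normal.pdf(w, mean=np.zeros(2), cov=np.eye(2))
print(density.shape)  # (100, 100)
```

`np.stack([w0, w1], axis=-1)` builds the same array and states the intent (parameter axis last) more directly.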

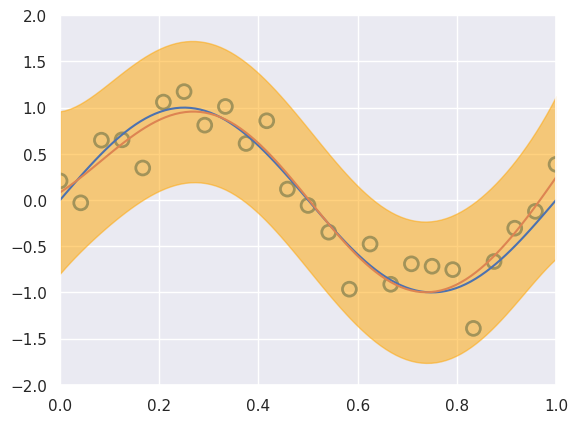

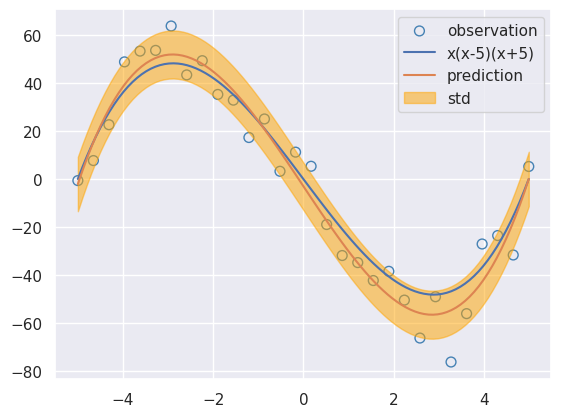

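```python
# Degree-1 polynomial basis: one feature column for the bias w0 and one for the slope w1
feature = PolynomialFeature(degree=1)
X_train = feature.transform(x_train)
X = feature.transform(x)
```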
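A degree-1 polynomial basis maps each scalar input to the row `[1, x]`, so the model output is `w0 + w1 * x`. The sketch below uses a hypothetical `polynomial_design_matrix` built on `np.vander` as a stand-in for `PolynomialFeature(degree).transform`, assuming the class orders its columns by increasing power; it checks that the true weights reproduce `linear(x)`.

```python
import numpy as np


def polynomial_design_matrix(x, degree=1):
    """Hypothetical stand-in for PolynomialFeature(degree).transform(x):
    column k holds x**k, so degree=1 yields the columns [1, x]."""
    return np.vander(x, degree + 1, increasing=True)


x = np.linspace(-1, 1, 5)
X = polynomial_design_matrix(x)
print(X.shape)  # (5, 2)

# With this basis, the true weights (w0, w1) = (-0.3, 0.5) reproduce linear(x)
w_true = np.array([-0.3, 0.5])
print(np.allclose(X @ w_true, -0.3 + 0.5 * x))  # True
```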

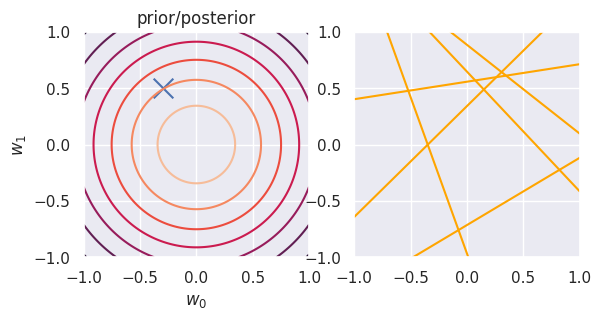

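```python
# alpha: precision of the Gaussian prior over the weights
# beta: noise precision; 100 corresponds to noise std 1/sqrt(100) = 0.1, matching the data
model = BayesianRegression(alpha=1., beta=100.)
```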
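The loop that follows passes only the newly revealed points to `fit` on each pass, which only makes sense if `fit` treats the current posterior as the next prior. Below is a minimal from-scratch sketch of that conjugate update, assuming a zero-mean prior with precision `alpha * I` and known noise precision `beta`: the new precision is $S_N^{-1} = S_0^{-1} + \beta \Phi^\top \Phi$ and the new mean is $m_N = S_N(S_0^{-1} m_0 + \beta \Phi^\top t)$. The class name is hypothetical and need not match how `BayesianRegression` is implemented; only the attribute names `w_mean` and `w_cov` mirror what the loop reads.

```python
import numpy as np


class SequentialBayesianRegression:
    """Hypothetical sketch of conjugate Bayesian linear regression.

    Prior over weights: N(0, alpha^-1 I). Noise precision: beta (assumed known).
    Each fit() call uses the current posterior as the prior for the new data.
    """

    def __init__(self, alpha=1.0, beta=100.0, n_features=2):
        self.beta = beta
        self.w_mean = np.zeros(n_features)
        self.w_precision = alpha * np.eye(n_features)

    @property
    def w_cov(self):
        return np.linalg.inv(self.w_precision)

    def fit(self, X, t):
        # S_N^{-1} = S_0^{-1} + beta * X^T X  (an empty X adds a zero matrix)
        precision = self.w_precision + self.beta * X.T @ X
        # m_N = S_N (S_0^{-1} m_0 + beta * X^T t), solved without an explicit inverse
        rhs = self.w_precision @ self.w_mean + self.beta * X.T @ t
        self.w_mean = np.linalg.solve(precision, rhs)
        self.w_precision = precision


rng = np.random.default_rng(0)
x = rng.uniform(-1, 1, 20)
X = np.column_stack([np.ones_like(x), x])
t = -0.3 + 0.5 * x + rng.normal(scale=0.1, size=x.shape)

# Same chunking as the notebook loop: 0, 1, 2, 3, then all 20 points seen
sequential = SequentialBayesianRegression(alpha=1.0, beta=100.0)
for begin, end in [[0, 0], [0, 1], [1, 2], [2, 3], [3, 20]]:
    sequential.fit(X[begin:end], t[begin:end])

batch = SequentialBayesianRegression(alpha=1.0, beta=100.0)
batch.fit(X, t)

print(np.allclose(sequential.w_mean, batch.w_mean))  # True
print(np.allclose(sequential.w_cov, batch.w_cov))    # True
```

Because the Gaussian prior is conjugate to the Gaussian likelihood, feeding the data in chunks gives the same posterior as a single batch fit, which is what lets the loop display the posterior after each new observation.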

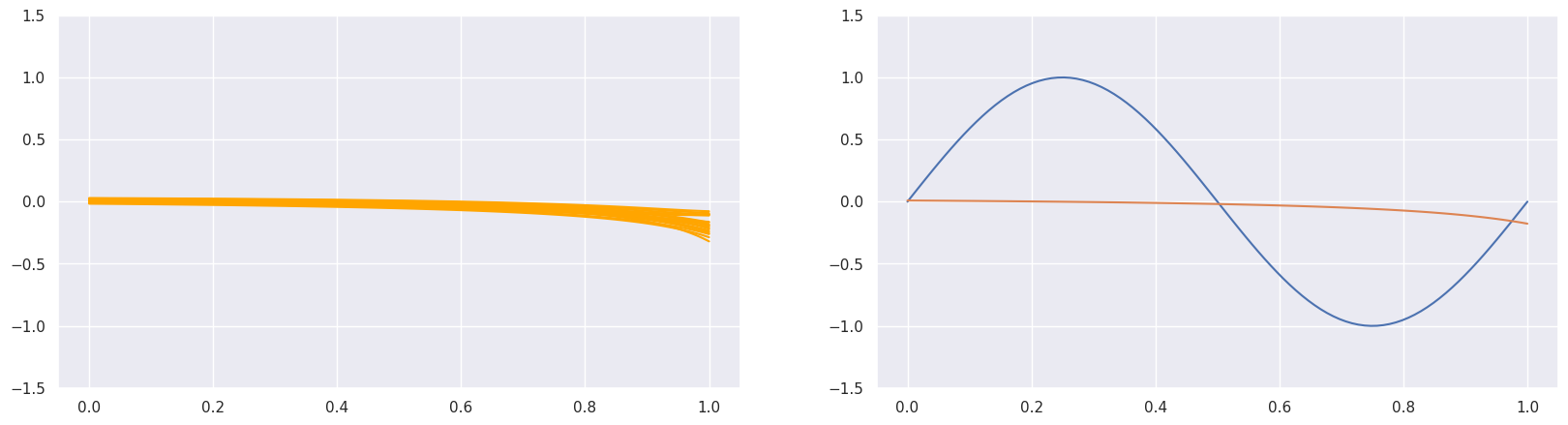

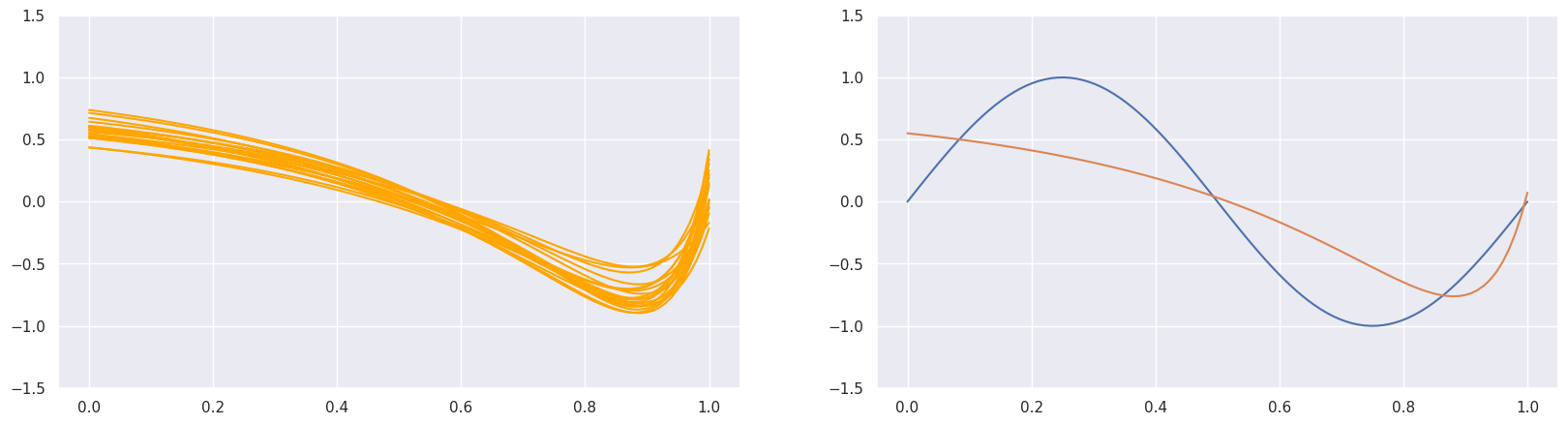

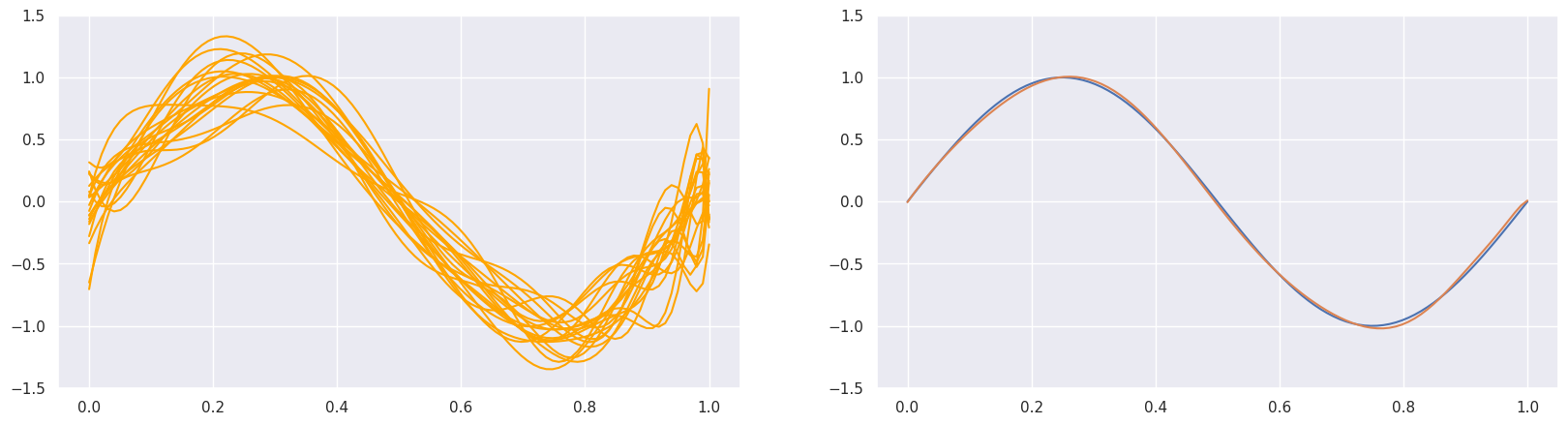

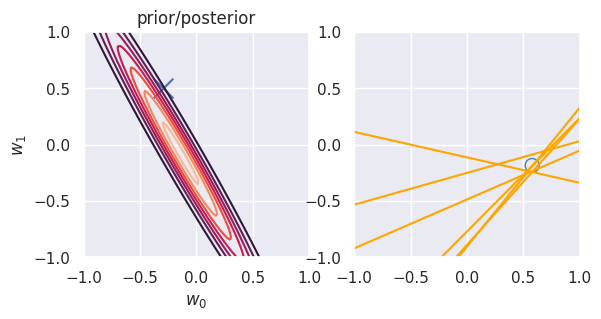

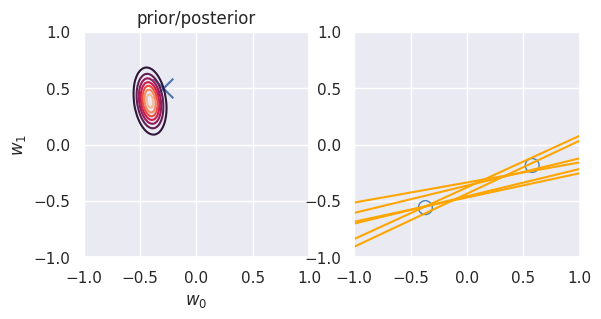

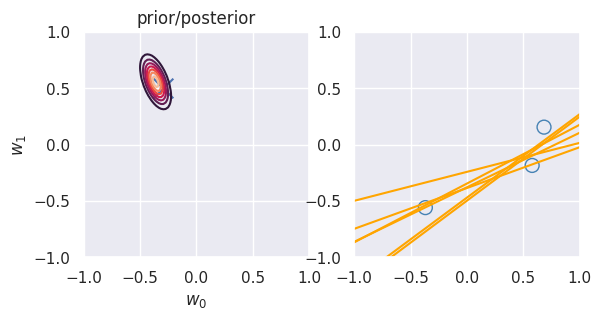

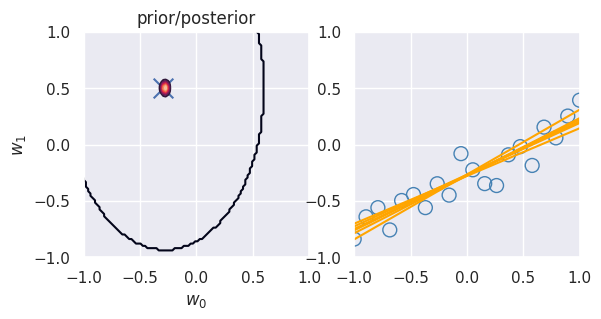

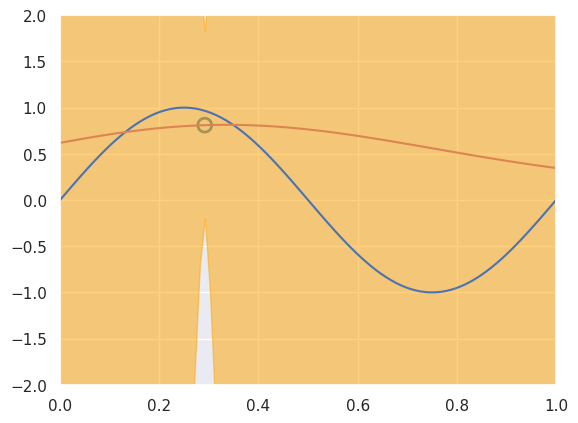

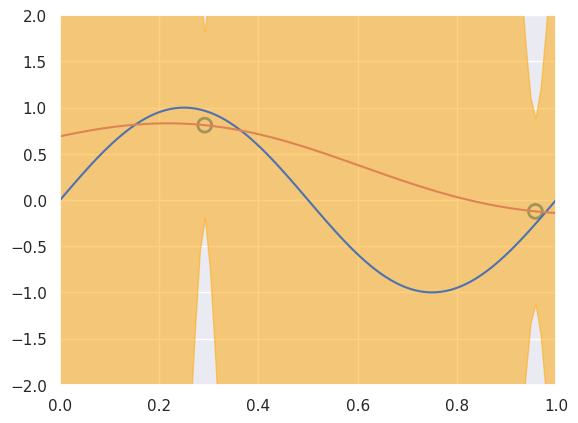

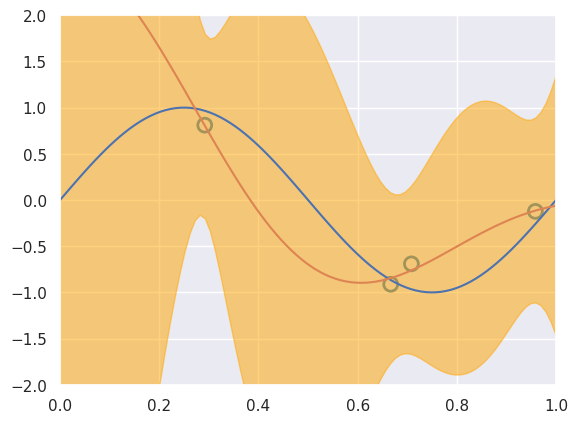

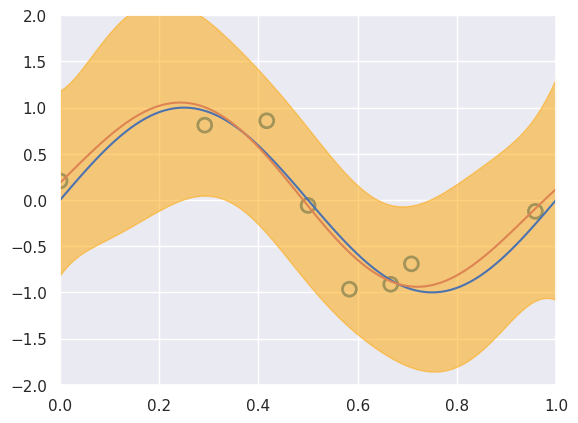

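```python
# Sequential learning: each pass fits only the new points, so the model has seen
# 0, 1, 2, 3 and finally all 20 observations after the five passes.
for begin, end in [[0, 0], [0, 1], [1, 2], [2, 3], [3, 20]]:
    model.fit(X_train[begin: end], y_train[begin: end])

    # Left: prior (first pass, no data) or posterior density over (w0, w1);
    # the x marks the true weights (-0.3, 0.5)
    plt.subplot(1, 2, 1)
    plt.scatter(-0.3, 0.5, s=200, marker="x")
    plt.contour(w0, w1, multivariate_normal.pdf(w, mean=model.w_mean, cov=model.w_cov))
    plt.gca().set_aspect('equal')
    plt.xlabel("$w_0$")
    plt.ylabel("$w_1$")
    plt.title("prior/posterior")

    # Right: observations seen so far and six regression lines sampled from the model
    plt.subplot(1, 2, 2)
    plt.scatter(x_train[:end], y_train[:end], s=100, facecolor="none", edgecolor="steelblue", lw=1)
    plt.plot(x, model.predict(X, sample_size=6), c="orange")
    plt.xlim(-1, 1)
    plt.ylim(-1, 1)
    plt.gca().set_aspect('equal', adjustable='box')
    plt.show()
```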
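`model.predict(X, sample_size=6)` appears to draw six weight vectors from the current posterior and return one line per sample, since `plt.plot` draws one curve per column of a 2-D array; the excerpt does not confirm this. The sketch below shows that sampling step alongside the standard closed-form predictive distribution, with mean $X m_N$ and variance $1/\beta + x^\top S_N x$. Both function names are hypothetical, and the posterior values are made up for illustration rather than taken from the notebook.

```python
import numpy as np


def sample_regression_lines(X, w_mean, w_cov, sample_size, rng):
    """Draw weight vectors from N(w_mean, w_cov); return one line per column."""
    w_samples = rng.multivariate_normal(w_mean, w_cov, size=sample_size)  # (sample_size, 2)
    return X @ w_samples.T  # (n_points, sample_size)


def predictive_mean_std(X, w_mean, w_cov, beta):
    """Predictive mean X m_N and std sqrt(1/beta + x^T S_N x) for each row x of X."""
    mean = X @ w_mean
    # Row-wise quadratic form x^T S_N x without building an n x n matrix
    var = 1.0 / beta + np.sum((X @ w_cov) * X, axis=1)
    return mean, np.sqrt(var)


# Illustrative posterior (made-up values, not from the notebook)
x = np.linspace(-1, 1, 100)
X = np.column_stack([np.ones_like(x), x])
w_mean = np.array([-0.3, 0.5])
w_cov = 0.01 * np.eye(2)

lines = sample_regression_lines(X, w_mean, w_cov, sample_size=6, rng=np.random.default_rng(0))
mean, std = predictive_mean_std(X, w_mean, w_cov, beta=100.0)
print(lines.shape)  # (100, 6)
```

The predictive standard deviation never drops below the noise level $1/\sqrt{\beta}$ and grows with $|x|$ here, reflecting the remaining uncertainty in the slope; the sampled lines show the same spread visually.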