In this assignment, we will explore how CNNs work and how different layers extract the important features from an image. For the CNN implementation, use only PyTorch libraries. For GradCAM and Occlusion, you are free to use the Captum library.

We invite you to watch the following video and read this reference, which provide the context of the assignment and describe the tools you can use to implement the explainers.

For the tasks below, you will use the dataset from Hugging Face. You can load it with the datasets library.

Task-1 - Convolutional Neural Networks for Image Classification

In this task, you will train a simple CNN model to perform binary classification on cats and dogs. You will be provided with a dataset.
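As a starting point, a simple CNN for this task could look like the sketch below. The class name `SimpleCNN`, the layer sizes, and the 128x128 input resolution are assumptions made for illustration, not requirements of the assignment.

```python
import torch
import torch.nn as nn


class SimpleCNN(nn.Module):
    """Small CNN for binary (cat vs. dog) classification; sizes are illustrative."""

    def __init__(self, num_classes: int = 2):
        super().__init__()
        # Three conv blocks; each one is a candidate layer for GradCAM later on.
        self.features = nn.Sequential(
            nn.Conv2d(3, 16, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(2),
            nn.Conv2d(16, 32, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 64, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.AdaptiveAvgPool2d(1),  # makes the head independent of image size
        )
        self.classifier = nn.Linear(64, num_classes)

    def forward(self, x):
        x = self.features(x)
        x = torch.flatten(x, 1)  # (batch, 64)
        return self.classifier(x)  # raw logits, one per class


model = SimpleCNN()
dummy_batch = torch.randn(4, 3, 128, 128)  # assumed input size
logits = model(dummy_batch)
print(logits.shape)  # torch.Size([4, 2])
```

Returning raw logits keeps the model compatible with `nn.CrossEntropyLoss`, which applies the softmax internally.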

For submission, upload the following:

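- Model summary. PyTorch modules do not provide a Keras-style model.summary(), so print the model with print(model) or use a package such as torchinfo.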

- The train vs val loss and accuracy plots

- The confusion matrix

- The classification report on the test dataset
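The confusion matrix and the per-class numbers behind a classification report can be computed directly from the predictions. The sketch below uses plain PyTorch and made-up labels; the helper names `confusion_matrix` and `per_class_report` are hypothetical, and a library such as scikit-learn offers ready-made equivalents.

```python
import torch


def confusion_matrix(y_true, y_pred, num_classes=2):
    """Rows are true classes, columns are predicted classes."""
    cm = torch.zeros(num_classes, num_classes, dtype=torch.long)
    for t, p in zip(y_true.tolist(), y_pred.tolist()):
        cm[t, p] += 1
    return cm


def per_class_report(cm):
    """Precision, recall and F1 per class, derived from the confusion matrix."""
    tp = cm.diag().float()
    precision = tp / cm.sum(dim=0).clamp(min=1)  # column sums = predicted counts
    recall = tp / cm.sum(dim=1).clamp(min=1)     # row sums = true counts
    f1 = 2 * precision * recall / (precision + recall).clamp(min=1e-12)
    return precision, recall, f1


# Made-up labels for illustration only (0 = cat, 1 = dog is an assumption).
y_true = torch.tensor([0, 0, 1, 1, 1, 0])
y_pred = torch.tensor([0, 1, 1, 1, 0, 0])

cm = confusion_matrix(y_true, y_pred)
precision, recall, f1 = per_class_report(cm)
print(cm)
print(precision, recall, f1)
```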

Task-2 - GradCAM and Occlusion

A cool task for the above model is to learn how the model extracts different features in each layer. To do this, we will use the GradCAM and Occlusion methods.

GradCAM

GradCAM uses the gradients of the target class w.r.t. the feature maps of a CNN layer to compute importance weights. In this exercise, we want you to generate visualizations and write short notes on your understanding of how each layer contributes to the final classification result. You should also overlay the heat maps on the image to support your understanding of the model’s behavior.
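The computation described above can be sketched in plain PyTorch as follows. This is a minimal illustration, not a reference implementation: the function name `grad_cam`, the toy model, and the choice of layer are assumptions, and Captum provides its own GradCAM tooling if you prefer a library.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def grad_cam(model, layer, image, target_class):
    """Return a heat map in [0, 1] with the same height and width as `image`."""
    stored = {}
    # Capture the feature maps produced by `layer` during the forward pass.
    handle = layer.register_forward_hook(
        lambda module, inputs, output: stored.update(acts=output)
    )
    try:
        model.eval()
        logits = model(image)
    finally:
        handle.remove()

    acts = stored["acts"]  # (1, C, h, w)
    # Gradient of the target class score w.r.t. the feature maps.
    grads = torch.autograd.grad(logits[0, target_class], acts)[0]
    # Importance weight per channel: spatial average of the gradients.
    weights = grads.mean(dim=(2, 3), keepdim=True)
    cam = F.relu((weights * acts).sum(dim=1, keepdim=True)).detach()
    # Upsample to the input resolution so it can be overlaid on the image.
    cam = F.interpolate(cam, size=image.shape[-2:], mode="bilinear", align_corners=False)
    cam = cam - cam.min()
    cam = cam / cam.max().clamp(min=1e-8)
    return cam[0, 0]


torch.manual_seed(0)
toy_model = nn.Sequential(  # stand-in for the Task-1 model
    nn.Conv2d(3, 8, 3, padding=1), nn.ReLU(), nn.MaxPool2d(2),
    nn.Conv2d(8, 16, 3, padding=1), nn.ReLU(),
    nn.AdaptiveAvgPool2d(1), nn.Flatten(), nn.Linear(16, 2),
)
image = torch.rand(1, 3, 64, 64)
heatmap = grad_cam(toy_model, toy_model[4], image, target_class=1)
print(heatmap.shape)
```

Calling the function once per convolutional block gives one heat map per layer. For the overlay, one option is to draw the image with matplotlib's `imshow` and then draw the heat map on top with an `alpha` below 1.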

Occlusion

Occlusion (occlusion sensitivity) is a simple but powerful interpretability technique for CNNs. Essentially, we make the model freak out by masking small, specific patches of the input image. You take an input image and systematically mask (occlude) different parts of it (e.g., by placing a gray or black square patch over a region). For each occluded version of the image, you run it through the CNN and record how much the model’s prediction score for the target class changes.

The idea:

If covering a region makes the score drop a lot, that region is important for the decision.

If covering it barely changes the score, it’s less relevant.
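The idea above can be sketched as a sliding-window loop. The function name `occlusion_map`, the patch size, the stride, the gray fill value of 0.5, and the toy model are all assumptions for illustration; Captum's `Occlusion` class offers a comparable routine.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


@torch.no_grad()
def occlusion_map(model, image, target_class, patch=16, stride=16, fill=0.5):
    """Slide a square patch over `image` and record the drop in target probability."""
    model.eval()
    _, _, height, width = image.shape
    base = F.softmax(model(image), dim=1)[0, target_class].item()
    rows = list(range(0, height - patch + 1, stride))
    cols = list(range(0, width - patch + 1, stride))
    heat = torch.zeros(len(rows), len(cols))
    for i, top in enumerate(rows):
        for j, left in enumerate(cols):
            occluded = image.clone()
            occluded[:, :, top:top + patch, left:left + patch] = fill  # mask one region
            prob = F.softmax(model(occluded), dim=1)[0, target_class].item()
            heat[i, j] = base - prob  # large positive value = important region
    return heat


torch.manual_seed(0)
toy_model = nn.Sequential(  # stand-in for the Task-1 model
    nn.Conv2d(3, 8, 3, padding=1), nn.ReLU(),
    nn.AdaptiveAvgPool2d(1), nn.Flatten(), nn.Linear(8, 2),
)
image = torch.rand(1, 3, 64, 64)  # pixel values assumed to lie in [0, 1]
heat = occlusion_map(toy_model, image, target_class=0)
print(heat.shape)  # torch.Size([4, 4])
```

Changing `patch`, `stride`, or `fill` gives different occlusion masks, so the same function can be reused to compare several of them.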

For submission, you are required to submit the accuracy score with at least 3 different occlusion masks and a heatmap plot for each mask.

Task-3 - Fast Gradient Sign Method for Adversarial Attacks

Let’s play a game of adversaries. Convolutional Neural Networks (CNNs) have achieved remarkable success in image classification tasks, from medical imaging to self-driving cars. However, CNNs are not invincible. Tiny, carefully crafted perturbations, often imperceptible to the human eye, can cause a model to make confident but wrong predictions. An attack that crafts such perturbations is known as an adversarial attack.

One of the simplest and most famous attacks is the Fast Gradient Sign Method (FGSM), introduced by Goodfellow et al. (2015).

In this task, we will use the same technique to check whether the CNN classifier you developed in Task-1 is vulnerable to adversarial attacks. You will need to use FGSM to create adversarial examples for the test dataset and check whether the model is still able to make correct predictions.
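FGSM takes a single step in the direction of the sign of the loss gradient with respect to the input, scaled by epsilon. A minimal sketch is shown here; the function name `fgsm_attack`, the toy model, the random data, and the assumption that pixel values lie in [0, 1] are illustrative choices, so adapt the clamping range if your inputs are normalized differently.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def fgsm_attack(model, images, labels, epsilon):
    """Return `images` shifted by epsilon * sign(gradient of the loss w.r.t. the input)."""
    model.eval()
    images = images.clone().detach().requires_grad_(True)
    loss = F.cross_entropy(model(images), labels)
    grad = torch.autograd.grad(loss, images)[0]
    adversarial = images + epsilon * grad.sign()
    # Keep the result a valid image (assumes pixel values in [0, 1]).
    return adversarial.clamp(0.0, 1.0).detach()


torch.manual_seed(0)
toy_model = nn.Sequential(  # stand-in for the Task-1 model
    nn.Conv2d(3, 8, 3, padding=1), nn.ReLU(),
    nn.AdaptiveAvgPool2d(1), nn.Flatten(), nn.Linear(8, 2),
)
images = torch.rand(8, 3, 32, 32)     # stand-in for a test batch
labels = torch.randint(0, 2, (8,))    # stand-in for the true labels

for epsilon in (0.0, 0.05, 0.1, 0.2):
    adversarial = fgsm_attack(toy_model, images, labels, epsilon)
    with torch.no_grad():
        predictions = toy_model(adversarial).argmax(dim=1)
    accuracy = (predictions == labels).float().mean().item()
    print(f"epsilon={epsilon:.2f}  accuracy={accuracy:.2f}")
```

With epsilon set to 0 the images are returned unchanged, which gives a convenient baseline for comparing the accuracy at larger epsilon values.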

For submission, you are required to submit the accuracy score on the adversarial examples for at least 3 different epsilon values and display the adversarial images for each epsilon value.

Task-4 - Welcome to Similarity Search

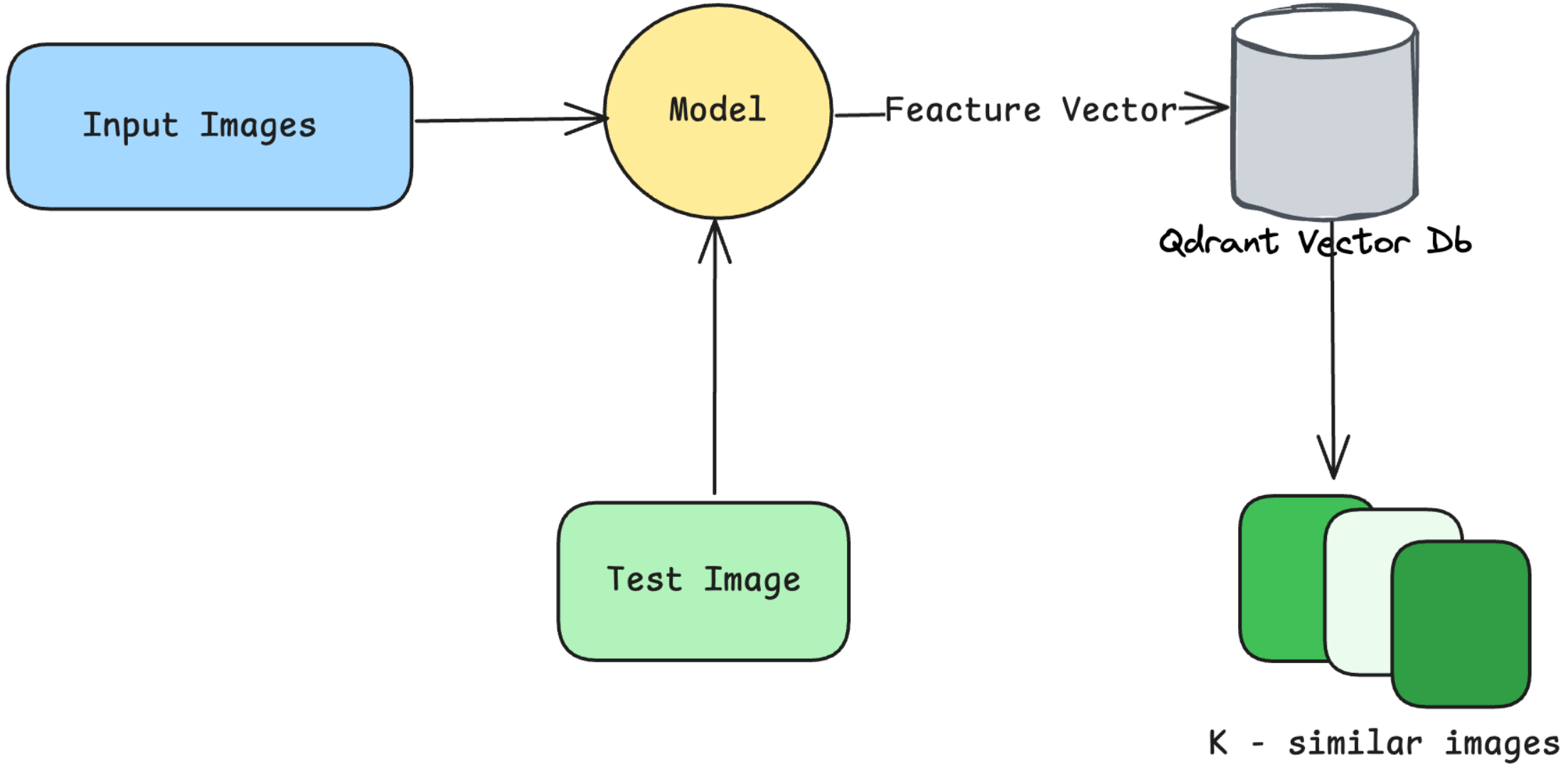

Big tech companies usually do image search, or any search in general, using the principle of randomness and locality-sensitive hashing. In class we have studied that CNNs are among the best feature extractors. My curious brain wonders if we can use this to build a similarity-based search engine for a particular task. My proposal is to re-use the CNN model from Task-1 to extract feature vectors from the images and use these feature vectors to build a similarity search engine. You will need to use a vector database (like PostgreSQL with the pgvector extension, or Qdrant) to store these feature vectors. Once your feature vectors are ready, we want you to select 3 random images and retrieve the top-k similar images from the database. Please assign a number of your choice to the variable k. For every image that is retrieved, display the retrieved image and its similarity score.
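To make the retrieval step concrete, the sketch below embeds a batch of images and ranks them by cosine similarity in memory. It is only a stand-in for what a vector database does: the in-memory tensor replaces pgvector or Qdrant, and `feature_extractor`, `embed`, and `top_k_similar` are hypothetical names. In your solution the extractor would be the Task-1 CNN without its final classification layer.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
feature_extractor = nn.Sequential(  # stand-in for the Task-1 CNN minus its classifier
    nn.Conv2d(3, 8, 3, padding=1), nn.ReLU(),
    nn.AdaptiveAvgPool2d(1), nn.Flatten(),
)


@torch.no_grad()
def embed(images):
    """Map images to L2-normalized feature vectors."""
    feature_extractor.eval()
    return F.normalize(feature_extractor(images), dim=1)


def top_k_similar(query_vec, index_vecs, k):
    """Return the ids and cosine similarities of the k closest stored vectors."""
    scores = index_vecs @ query_vec  # dot product of unit vectors = cosine similarity
    values, indices = torch.topk(scores, k)
    return indices.tolist(), values.tolist()


gallery = torch.rand(20, 3, 32, 32)  # stand-in for the image collection
index_vecs = embed(gallery)          # what would be stored in the vector database

k = 5
ids, scores = top_k_similar(index_vecs[3], index_vecs, k)
for image_id, score in zip(ids, scores):
    print(f"image {image_id}: similarity {score:.4f}")
```

Because the query image is itself part of the index here, it should come back first with a similarity close to 1. Normalizing the vectors before storing them also means that an inner-product search in the database returns the same ranking as cosine similarity.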

An example workflow is shown below:

References

https://arxiv.org/pdf/1412.6572.pdf

https://docs.pytorch.org/tutorials/beginner/fgsm_tutorial.html